Why is my crawl not crawling (and other uncommon crawl problems)?

Crawl may be the easiest way to extract structured data from entire websites, but occasionally you may run into issues: either a crawl fails to get off the ground, or it doesn't crawl deeply enough for your needs.

Here are some common solutions to these (uncommon) problems.

Troubleshooting 101: Check the URL Report

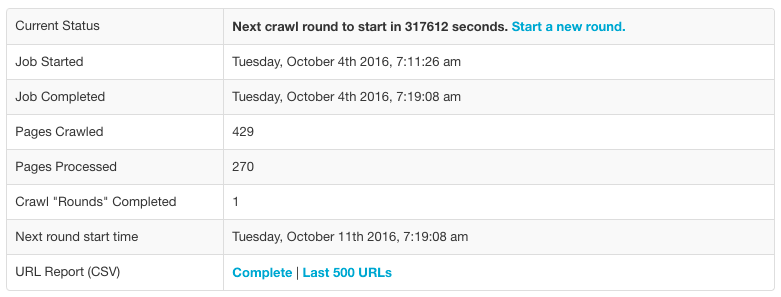

When in doubt, download your crawl’s URL Report. The URL Report logs every page encountered in a crawl along with the exact action Crawl took on each URL, which makes it the best starting point for debugging. The URL Report is available both during and after a crawl.
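If you'd rather not scan a large report by hand, a short script can tally it. This is a minimal sketch that assumes the URL Report is a CSV file with one column holding each URL and another describing what Crawl did with it. The column names `url` and `status`, and the status values in the demo, are hypothetical: rename them to match the headers in your downloaded file.

```python
import csv
import io
from collections import Counter

# Hypothetical column names: adjust these to match your URL Report's headers.
URL_COLUMN = "url"
STATUS_COLUMN = "status"


def summarize_url_report(csv_text: str) -> Counter[str]:
    """Count how many URLs ended up in each status."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return Counter(row[STATUS_COLUMN] for row in reader)


def urls_with_status(csv_text: str, status: str) -> list[str]:
    """List the URLs whose status exactly equals `status`."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [row[URL_COLUMN] for row in reader if row[STATUS_COLUMN] == status]


if __name__ == "__main__":
    # Made-up sample rows; real status wording will differ.
    sample = (
        "url,status\n"
        "https://example.com/,crawled\n"
        "https://example.com/a,blocked by robots.txt\n"
        "https://example.com/b,crawled\n"
    )
    print(summarize_url_report(sample))
    print(urls_with_status(sample, "blocked by robots.txt"))
```

In practice you would read the downloaded file with `open(path, encoding="utf-8").read()` and pass the text in.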

If your crawl fails to start at all

If a crawl doesn’t crawl past the seed URL, check the following possible scenarios:

- Check for robots.txt restrictions: Make sure the site’s robots.txt file doesn’t block crawling of (or beyond) your seed URLs. The URL Report will indicate when a URL can’t be crawled because of a robots.txt rule, or you can check the site’s robots.txt directly at subdomain.domain.com/robots.txt. If robots.txt is the problem, you can choose to “ignore robots.txt” in your crawl settings or via the Crawl API.

- Does the site require JavaScript? Check whether the site’s links are only available when JavaScript is executed. (To do this, view the site’s HTML source in your browser. If it contains few or no links, JavaScript is probably needed.) Because Crawl does not execute JavaScript by default, you’ll need to follow our JavaScript execution instructions to crawl these links.

- Connection or other errors may require proxy IPs: If Crawl can’t connect to the seed URL (a “TCP error” or another failure contacting the seed), the site may be blocking certain IP address ranges. In this case, try turning on proxies in your crawl dashboard or via the Crawl API.

- Make sure your crawling patterns aren’t too restrictive: Crawl will only crawl pages that match the specified crawling patterns. If your patterns don’t match any of the links on your seed pages, Crawl can’t follow links into the site. Read more on crawling vs processing.
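The robots.txt and JavaScript checks above can also be run from your own machine. Below is a minimal sketch using only Python's standard library (`urllib.robotparser` and `html.parser`). It assumes you have already saved the site's robots.txt and the raw HTML source of a seed page; raw HTML approximates what Crawl sees when it does not execute JavaScript. The user agent `"*"` is an assumption, since Crawl's actual user-agent string may match a more specific robots.txt group, and the function names are illustrative.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


def allowed_by_robots(robots_txt: str, url: str, user_agent: str = "*") -> bool:
    """Return True if this robots.txt text lets `user_agent` fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


class LinkCollector(HTMLParser):
    """Collect href values from <a> tags in un-rendered HTML."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names for us.
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def links_in_raw_html(html: str) -> list[str]:
    """Links visible without running JavaScript; few or none suggests JS is needed."""
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    return collector.links


if __name__ == "__main__":
    robots = "User-agent: *\nDisallow: /private/\n"
    print(allowed_by_robots(robots, "https://example.com/private/page"))  # False
    # A typical JavaScript-rendered shell page: no <a> links in the source.
    html = '<div id="app"></div><script src="bundle.js"></script>'
    print(len(links_in_raw_html(html)))  # 0
```

To test a live site instead of saved text, `RobotFileParser` can fetch the file itself via `set_url(...)` followed by `read()`.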

If you’ve determined that one of these issues is the culprit, restarting your crawl after making the appropriate change should do the trick.

If your crawl is crawling, but isn’t processing pages correctly

Sometimes the crawl is proceeding, but pages aren’t being processed, or data isn’t appearing in your downloaded JSON/CSV. Some potential reasons:

- Make sure your processing (or crawling) patterns aren’t too restrictive: If you’ve entered any processing patterns or processing regular expressions, only matching pages will be sent to the specified Extract API, so make sure these patterns aren’t too restrictive. If you’ve entered crawling patterns only (no processing patterns), your crawling patterns also apply to processing, so be sure they match the pages you want processed.

- If you’re using the Analyze API, make sure pages are being correctly identified: The Analyze API automatically identifies articles, products, images, discussions and other page types. It’s generally accurate, but occasionally it may misclassify a page. If pages are being processed (according to the URL Report) but data is not available, an Analyze misclassification may be the cause. In this case you can use the Custom API Toolkit to make a manual override, or contact [email protected] for help improving Analyze API page identification.
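To sanity-check the pattern rules in the first point above, the sketch below tests which URLs would pass a set of patterns. It assumes plain substring matching (a URL matches if it contains any pattern, and an empty pattern list matches everything); Crawl's actual pattern syntax may support more than this, so treat the result as a rough check rather than an exact replica. The fallback from processing patterns to crawling patterns follows the rule described above. All function names are hypothetical.

```python
def matches_any(url: str, patterns: list[str]) -> bool:
    """Assumed rule: match if the URL contains any pattern; no patterns matches all."""
    if not patterns:
        return True
    return any(pattern in url for pattern in patterns)


def will_crawl(url: str, crawl_patterns: list[str]) -> bool:
    return matches_any(url, crawl_patterns)


def will_process(url: str, crawl_patterns: list[str], process_patterns: list[str]) -> bool:
    """Pattern check only: with no processing patterns, crawling patterns decide."""
    effective = process_patterns if process_patterns else crawl_patterns
    return matches_any(url, effective)


def unmatched(urls: list[str], crawl_patterns: list[str]) -> list[str]:
    """Links from a seed page that the crawling patterns would not follow."""
    return [url for url in urls if not will_crawl(url, crawl_patterns)]


if __name__ == "__main__":
    links = ["https://shop.example/products/1", "https://shop.example/blog/post"]
    # A stray trailing slash in "/product/" means it matches neither link,
    # so a crawl using it could not get past the seed.
    print(unmatched(links, ["/product/"]))
    print(unmatched(links, ["/products/"]))
```

If every link from your seed page shows up as unmatched, the patterns are too restrictive for Crawl to get into the site.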

Again, restarting your crawl after making the appropriate change should do the trick.

If your crawl data is incorrect

If pages are being correctly processed but some of the fields are incorrect — for example, the offerPrice is off, or your extracted article is missing the introductory text — you can fix this using our Custom API Toolkit. The Custom API allows you to make site-specific, individual field overrides (or add any field you like to our existing APIs).

Changes made via the Custom API won’t apply to pages that have already been extracted, but restarting the crawl will apply your changes.

If your crawl won’t stop

Sometimes, due to site (mis)configuration, a site will dynamically generate pages ad infinitum. In these cases Crawl may keep crawling these dynamically generated pages until it reaches a crawling limit (maxToCrawl). Read about controlling a “neverending” crawl.
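To see why a limit like maxToCrawl is what eventually stops this kind of crawl, here is a toy breadth-first crawler in Python run against a simulated site whose calendar pages each link to a "next month" page forever. The `max_to_crawl` parameter only mirrors the idea of the maxToCrawl setting; this is not how Crawl is implemented, and the calendar site and function names are hypothetical.

```python
from collections import deque
from typing import Callable, Iterable


def crawl(seed: str, get_links: Callable[[str], Iterable[str]], max_to_crawl: int) -> list[str]:
    """Breadth-first crawl that stops once max_to_crawl pages have been visited."""
    seen = {seed}
    queue = deque([seed])
    crawled: list[str] = []
    while queue and len(crawled) < max_to_crawl:
        url = queue.popleft()
        crawled.append(url)  # stands in for fetching the page
        for link in get_links(url):
            if link not in seen:  # skip URLs already queued or visited
                seen.add(link)
                queue.append(link)
    return crawled


def calendar_links(url: str) -> list[str]:
    """Hypothetical misconfigured site: every month links to the next, endlessly."""
    month = int(url.rsplit("=", 1)[1])
    return [f"https://example.com/calendar?month={month + 1}"]


if __name__ == "__main__":
    pages = crawl("https://example.com/calendar?month=0", calendar_links, max_to_crawl=5)
    print(pages[-1])  # https://example.com/calendar?month=4
```

Skipping already-seen URLs doesn't help here, because every generated URL is new; only the page limit ends the crawl. Restrictive crawling patterns that exclude the generated URLs are the other way to keep such pages out.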

If you have other crawling problems or questions, contact us at [email protected].
